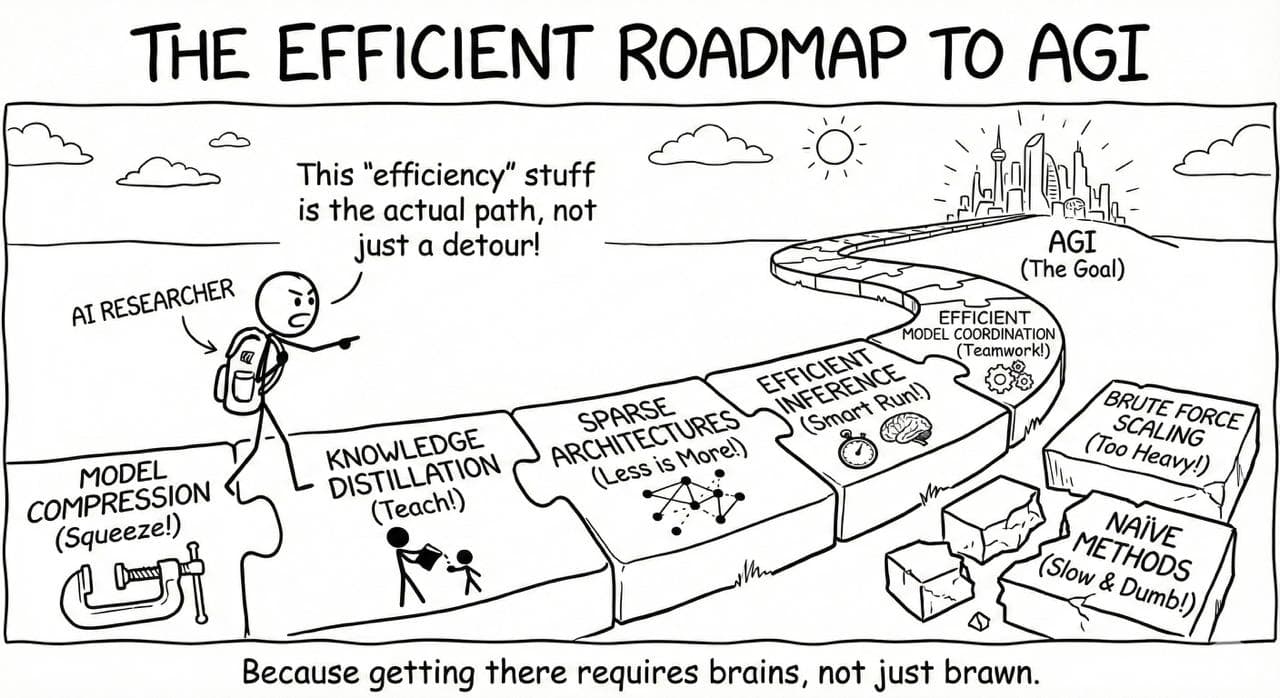

The Future of AI is Compact

Scaling Intelligence, Cutting Cost.

Our research group is dedicated to pioneering techniques, from sparse, efficient architectures to scalable inference paradigms, that make large language models accessible and deployable on commodity hardware.

Sponsors

We are grateful to our sponsors who help us drive innovation in efficient large language models.

Research Areas

We focus on the intersection of theoretical efficiency and practical deployment.

Recent Publications

See our latest breakthroughs in model compression and inference.

Get Updates

Stay up to date with new papers, tool releases, and research news.

Drive the Next Wave of Efficient AI.

We are always looking for passionate researchers, engineers, and PhD students. Join our highly collaborative team.