Efficient Scaling Laws

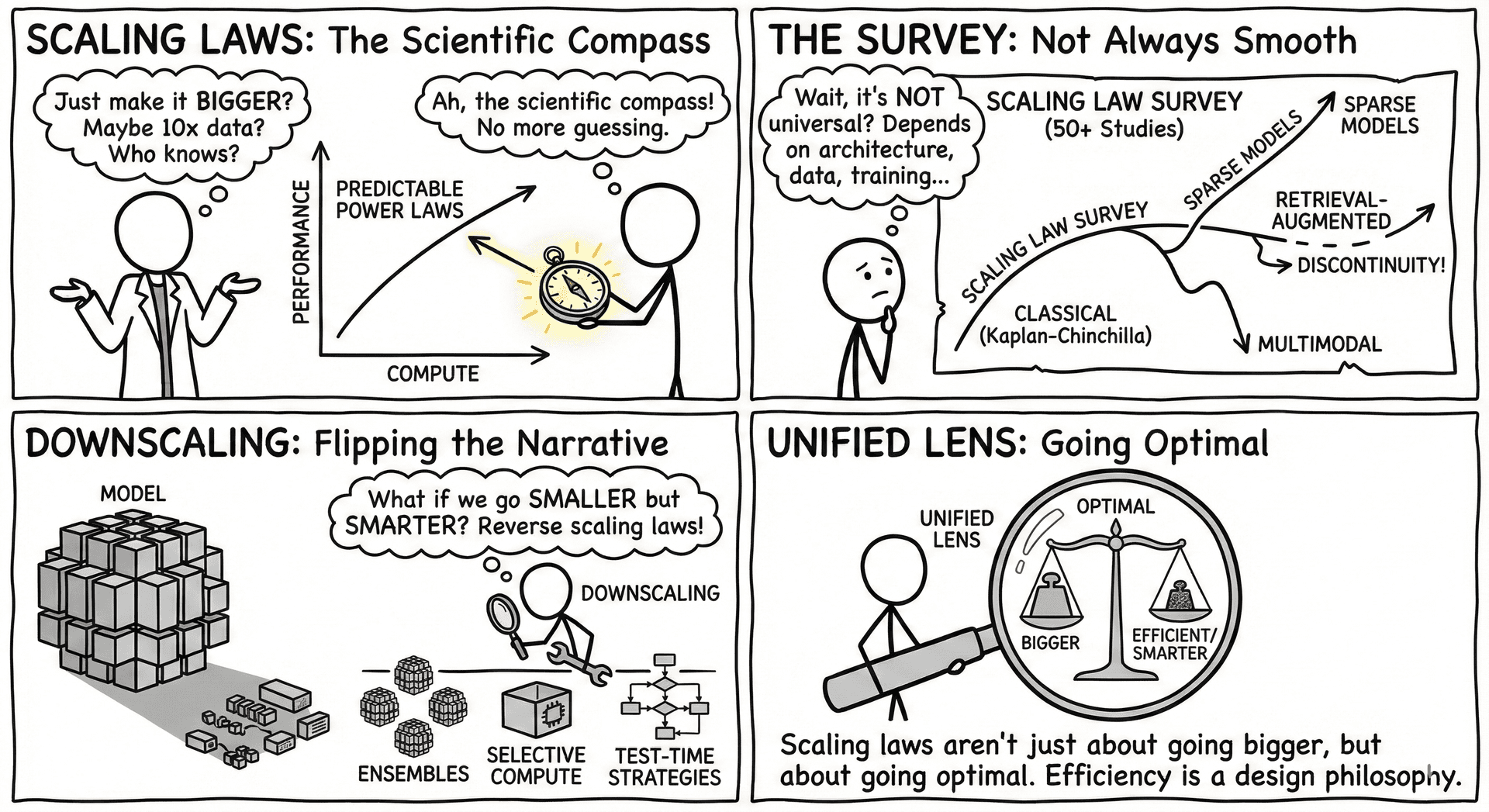

Scaling laws give us a scientific compass for navigating model design. Instead of guessing how big a model should be—or how much data it needs—we rely on empirical laws that relate parameters, data, compute, and performance through predictable power-law trends. These laws reveal where scaling helps, where it saturates, and where we are wasting compute.

The Scaling Law Survey synthesizes more than 50 studies showing that scaling behavior depends on architecture, data quality, training strategy, and inference procedures. It highlights that scaling laws are not universal: sparse models, retrieval-augmented LMs, multimodal encoders, and efficient variants often break classical Kaplan–Chinchilla predictions. The survey maps these regimes and provides practical guidance on when to expect smooth power laws—and when to expect discontinuities or inflection points.

The Downscaling work flips the narrative: rather than endlessly making models larger, it analyzes how to shrink models while preserving capability. It introduces early theoretical foundations for reverse scaling laws, showing how ensembles of small models, selective compute allocation, and test-time strategies can match or exceed the performance of a single large LLM under the same compute budget. Downscaling reveals that efficiency is not a post-hoc patch, but a scalable design philosophy.

Together, these works provide a unified lens: scaling laws are not just about going bigger, but about going optimal.

When to Use Which Paper

-

Scaling Law Survey - Use when you need a global map of how parameters, data, inference compute, and architectures scale—and where classical assumptions break.

-

Downscaling - Use when designing compute-efficient systems that rely on small or modular models, or when coordinating multiple experts under fixed compute budgets.

Why This Matters

Efficient scaling laws help answer the questions that truly matter in modern LLM development:

- How large should a model be for a given dataset and compute budget?

- When should we scale model size vs dataset size vs training length?

- When is it better to scale down and use multiple small models instead of one large one?

- How do architectural choices (MoE, sparse layers, state-space models) alter scaling dynamics?

- How does inference compute interact with training scale?

By grounding scaling decisions in theory and empirical regularities, these works help avoid overtraining, overspending, and overbuilding—pushing us toward a future where capability grows efficiently, not just expensively.