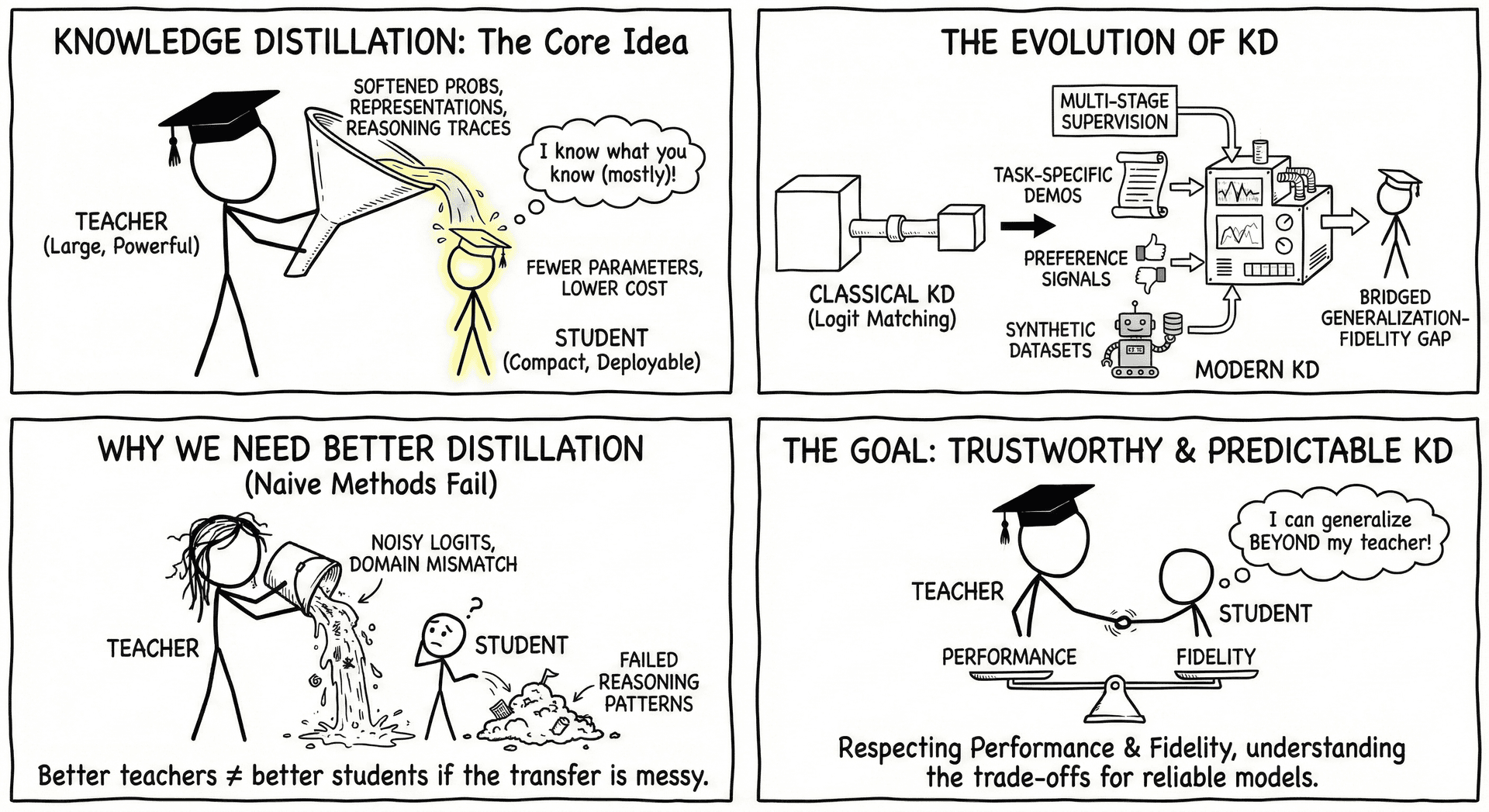

Knowledge Distillation

Knowledge distillation is a central technique in efficient model development, enabling researchers to transfer the capabilities of large, high-performance models into smaller, more deployable ones. At its core, distillation frames learning as a teacher–student paradigm: a powerful teacher model generates softened probability distributions, intermediate representations, or reasoning traces that guide a compact student model during training. This process allows the student to approximate the teacher’s behavior while requiring significantly fewer parameters and lower inference cost. In the context of large language models, distillation has evolved far beyond classical logit matching—modern approaches incorporate multi-stage supervision, task-specific demonstrations, preference signals, and even synthetic datasets generated by foundation models themselves. These methods help bridge the generalization–fidelity gap, ensuring that student models retain both the robustness and nuanced behavior of their teachers.

Why We Need Better Distillation

- Small and medium models benefit enormously from KD, but naïve methods fail to preserve the teacher’s reasoning patterns.

- Better teachers do not always yield better students; domain mismatch or noisy logits can severely harm performance.

- Traditional KD assumes “copying the teacher” is optimal, but real-world tasks require students that can generalize beyond their teachers.

To make KD trustworthy and predictable, we need methods that respect both performance and fidelity—and understand the trade-offs between them.

How Our Works Connect the Dots

-

MPDistil — Meta-Policy Distillation, Reimagines KD as a co-evolutionary game: a student, a teacher, and a meta-teacher jointly optimize a shared utility. Students learn curricula that help them surpass the teacher, while the meta-teacher shapes hidden representations. MPDistil shows that effective KD is not unidirectional—it is collaborative, competitive, and dynamic.

-

Generalization vs Fidelity Paradox Demonstrates that KD improves accuracy for small models but does not guarantee faithfulness to teacher reasoning. Students may perform better while agreeing less with the teacher and following different rationale patterns. This establishes KD as a trade-off mechanism: you transfer competence, not cognition.

Together, these works reposition distillation as more than compression—it becomes a controlled transformation of a model’s decision process.

Practical Guide — When to Use Which Method?

- If you want students that outperform their teachers → Use MPDistil (curriculum + meta-learning).

- If you want to understand whether KD preserves reasoning → Use the Paradox paper’s metrics (agreement, fidelity, rationale similarity).

- If evaluating teacher quality or temperature effects → Refer to the Paradox paper’s findings on noise sensitivity and domain adaptation.

- If designing multi-task or curriculum-based KD → Use MPDistil for joint optimization of teacher and student.

Big Picture

Knowledge distillation is not a mechanical transfer—it is an intervention in how models think. MPDistil shows how to make that intervention constructive and self-improving; the Generalization–Fidelity Paradox shows why such sophistication is necessary. Efficient KD, therefore, is about cultivating students that are strong, reliable, and strategically aligned—not merely compressed replicas of their teachers.