Model Coordination

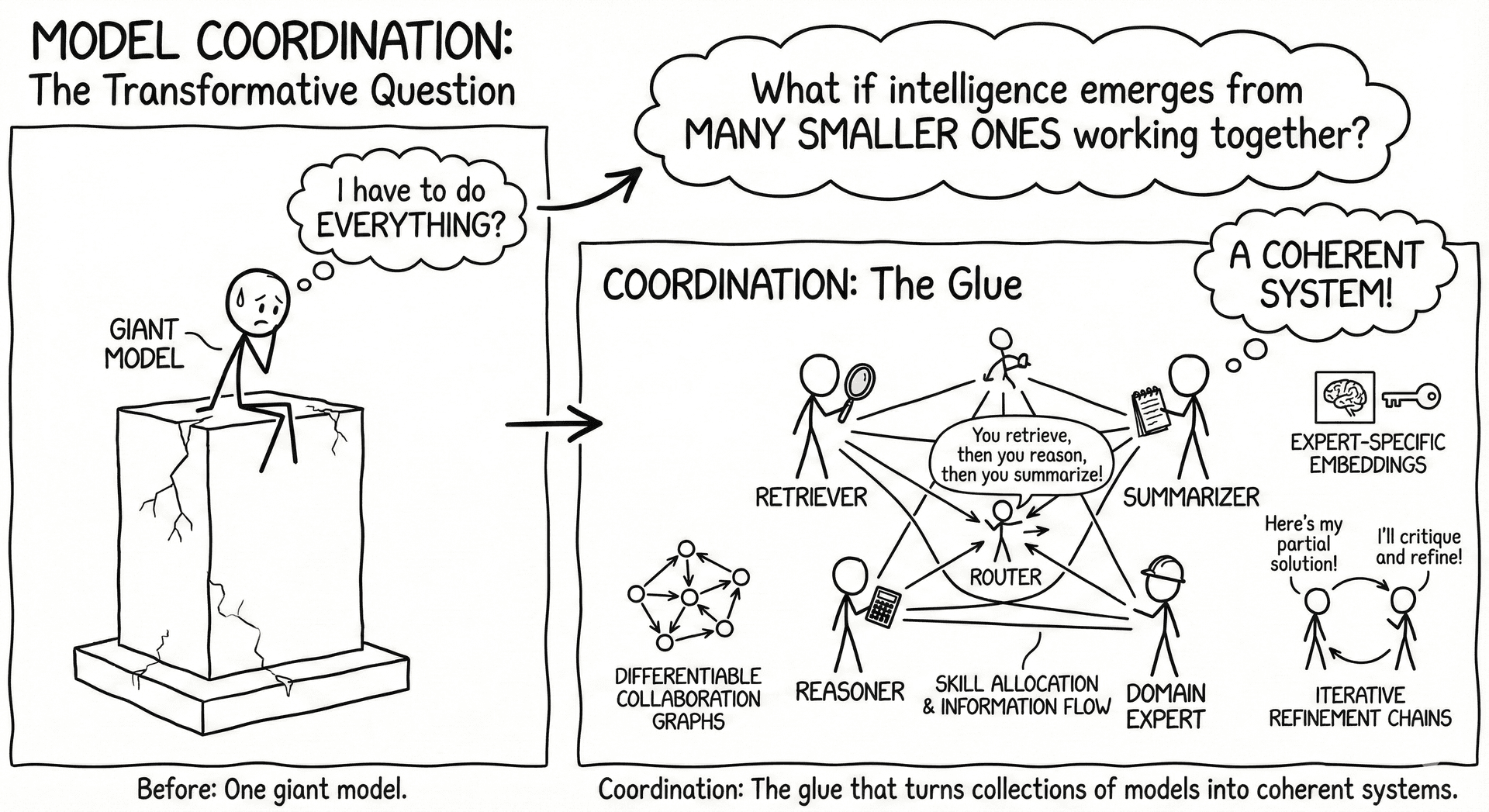

Model coordination asks a simple but transformative question: what if intelligence emerges not from one giant model, but from many smaller ones working together? As LLM ecosystems move toward modular, multi-agent, and expert-driven workflows, coordination becomes the glue that turns collections of models into coherent systems.

At its core, coordination is about skill allocation and information flow. Different models, or different versions of the same model, specialize naturally: some reason well, some retrieve well, some summarize crisply, and others excel at domain-specific logic. Coordination mechanisms let these specialized models interact without stepping on each other's toes. Techniques such as differentiable collaboration graphs, expert-specific embeddings, lightweight routers, and iterative refinement chains allow models to exchange partial solutions, critique each other, and correct each other's errors.
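To make the "lightweight router" idea concrete, here is a minimal sketch in Python. Everything in it is an assumption made for illustration: the expert functions are plain stand-ins for real model calls, and the keyword-overlap scoring replaces whatever learned routing a production system would use. It only shows the shape of the mechanism: score each expert against the query, dispatch to the best match, and fall back to a generalist.

```python
from typing import Callable, Dict, Set, Tuple

Expert = Callable[[str], str]

# Hypothetical experts: stand-ins for calls to specialized models.
def retrieval_expert(query: str) -> str:
    return f"[retrieval] documents for: {query}"

def code_expert(query: str) -> str:
    return f"[code] snippet for: {query}"

def general_expert(query: str) -> str:
    return f"[general] answer to: {query}"

# Illustrative routing table: expert name -> (trigger keywords, expert).
# A real router would more likely use embeddings or a small classifier.
ROUTES: Dict[str, Tuple[Set[str], Expert]] = {
    "retrieval": ({"find", "search", "lookup", "cite"}, retrieval_expert),
    "code": ({"function", "bug", "compile", "python"}, code_expert),
}

def route(query: str) -> str:
    """Return the name of the expert whose keywords overlap the query most."""
    tokens = set(query.lower().split())
    best_name, best_score = "general", 0
    for name, (keywords, _) in ROUTES.items():
        score = len(tokens & keywords)
        if score > best_score:
            best_name, best_score = name, score
    return best_name

def answer(query: str) -> str:
    """Dispatch the query to the selected expert, or to the generalist."""
    name = route(query)
    expert = ROUTES[name][1] if name in ROUTES else general_expert
    return expert(query)
```

Calling `answer("fix this python bug")` dispatches to the code expert, while a query with no matching keywords falls through to the generalist. The fallback matters in practice: a router that always forces a specialist will misroute anything outside its keyword sets.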

Practical Guidelines

- Use coordination when tasks decompose naturally: for multi-step reasoning, iterative refinement chains often outperform a single forward pass.

- Use coordination when combining diverse strengths: retrieval-heavy tasks, code generation, and safety-critical workflows benefit from ensembles of specialized experts.

- Use coordination when scaling down, not up: downscaling laws suggest that multiple 1B–3B-parameter models can match or surpass a single large model if coordinated well.

- Avoid coordination when latency budgets are extremely tight: multi-call systems incur overhead, so a single-pass model may be preferable under real-time constraints.
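The trade-off between refinement quality and latency in these guidelines can be sketched as a draft, critique, revise loop with a round limit. This is a hedged illustration, not a reference implementation: `refine`, `max_rounds`, and the three toy functions are hypothetical names, and the toy "models" simply manipulate strings so the example runs without any external service. The function returns the number of model calls alongside the result, since that count is the overhead a tight latency budget has to absorb.

```python
from typing import Callable, Optional, Tuple

def refine(
    task: str,
    draft_fn: Callable[[str], str],
    critique_fn: Callable[[str, str], Optional[str]],
    revise_fn: Callable[[str, str, str], str],
    max_rounds: int = 3,
) -> Tuple[str, int]:
    """Run a draft -> critique -> revise chain; return (result, model calls)."""
    candidate = draft_fn(task)
    calls = 1
    for _ in range(max_rounds):
        feedback = critique_fn(task, candidate)
        calls += 1
        if feedback is None:  # critic found nothing to fix: stop early
            break
        candidate = revise_fn(task, candidate, feedback)
        calls += 1
    return candidate, calls

# Toy stand-ins for three specialized models (assumptions for illustration).
# The "task" is a space-separated list of terms the answer must contain.
def toy_draft(task: str) -> str:
    return task.split()[0]

def toy_critic(task: str, candidate: str) -> Optional[str]:
    present = set(candidate.split())
    for term in task.split():
        if term not in present:
            return term  # report the first missing term
    return None

def toy_revise(task: str, candidate: str, feedback: str) -> str:
    return f"{candidate} {feedback}"

full, full_calls = refine("alpha beta gamma", toy_draft, toy_critic, toy_revise)
quick, quick_calls = refine(
    "alpha beta gamma", toy_draft, toy_critic, toy_revise, max_rounds=1
)
print(full, full_calls)    # alpha beta gamma 6
print(quick, quick_calls)  # alpha beta 3
```

With three rounds the chain reaches a complete answer at the cost of six calls; capped at one round it returns an incomplete answer after three. That is the decision the last guideline describes: when the budget cannot cover the extra calls, a single pass may be the better choice.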

Model coordination shifts the paradigm: instead of one model trying to do everything, several smaller, efficient models work together to achieve more than any one of them could alone.